Video

- Concept of the week: K8s architecture: Containers, Pods, and kubelets (867s)

- PR of the week: PR 11 Merge contributor version of k8s charts with the community version (3320s)

- Demo: Running the Trino charts with kubectl (3462s)


This is the first episode in a series where we cover the basics and just enough advanced Kubernetes features and information to understand how to deploy Trino on Kubernetes.

Concept of the week: K8s architecture: Containers, Pods, and kubelets

For this concept of the week, we want to provide you a minimalistic overview of what you need to know about Kubernetes to deploy Trino to a cluster.

- Why Kubernetes? Kubernetes is a container orchestration platform that lets you declare how containers should be managed using YAML configuration files. This definition can be hard to follow without the proper context. To make sure nobody is left behind, it helps to first cover what containers are:

- The traditional way to deploy an application is to take its compiled binary and run it directly on a machine and its operating system. This works, but the application depends heavily on the underlying hardware and operating system, and multiple applications have to share the same resources. If one application fails and takes down a shared resource, every application on that machine can fail.

- To remove these dependencies, engineers created virtual machines (VMs). A VM manager called the hypervisor emulates hardware environments that host other operating systems. This is a big step forward because each application can now be isolated, but it comes at a great cost. Each virtual machine hosts an entire operating system, which makes it resource intensive and slow.

- Containers are the newest type of deployment. Containers enable a logical isolation of resources while still physically running on shared resources. All hardware and operating system resources they use exist on the host system. The isolation restricts any interference from other processes. Containers achieve the goals of virtualization without sacrificing much performance or efficiency.

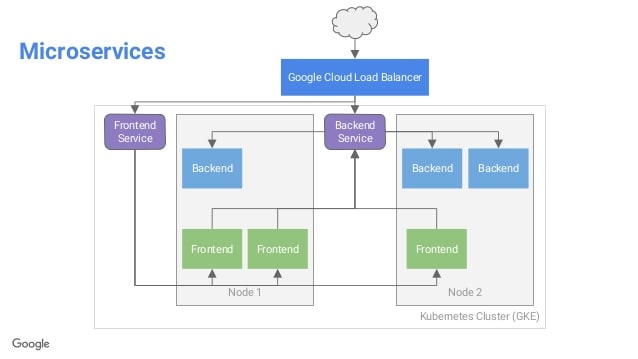

Source: https://kubernetes.io/docs/concepts/overview/what-is-kubernetes/

- Containerization made it easier to adopt microservices, a trend in service-oriented architecture. Microservices deploy loosely coupled and modular applications rather than all-encompassing monolithic applications. With containers, these applications can be deployed and scaled up quickly across various virtual and physical machines without affecting other applications on the same machine. This is great, but it introduces new complexities: monitoring the health of applications, scaling them as requests grow and diminish, redeploying crashed applications, and networking the applications together. Taken together, these activities are container orchestration, and this is exactly what Kubernetes solves!

Source: https://www.slideshare.net/devopsdaysaustin/continuously-delivering-microservices-in-kubernetes-using-jenkins

Here we have two services that each sit behind a load balancer provided and mapped by the Kubernetes cluster.

Kubernetes components and architecture:

- Node - The physical machine or VM running a kubelet and container runtime.

- Control Plane - The container orchestration layer that exposes the API and interfaces to define, deploy, and manage the lifecycle of containers.

- Cluster - A set of nodes connected to the same control plane.

- Pod - A single instance of an application, the smallest object in Kubernetes.

Source: https://kubernetes.io/docs/concepts/overview/components/
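To make the Pod and declarative YAML ideas more concrete, here is a minimal sketch of a Pod manifest written from the shell. The file name `trino-pod.yaml`, the Pod name `trino-example`, and the label are hypothetical values chosen for illustration; they are not part of the Trino chart.

```shell
#!/bin/sh
# Write a minimal Pod manifest to the file given as the first argument.
# The Pod name and label below are illustrative assumptions.
write_pod_manifest() {
  cat > "$1" <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: trino-example
  labels:
    app: trino
spec:
  containers:
    - name: trino
      image: trinodb/trino:latest
      ports:
        - containerPort: 8080
EOF
}

write_pod_manifest trino-pod.yaml
# To submit the manifest to a running cluster, you would then run:
#   kubectl apply -f trino-pod.yaml
```

The manifest only states what should exist. Kubernetes works out how to get there once the file is applied.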

Kubernetes control plane components:

- API server - The front end of the cluster that nodes connect to and that users and administrators interact with.

- etcd - A distributed key-value store that contains all the data used to manage the cluster.

- Scheduler - Distributes work across nodes and assigns newly created pods to nodes.

- Controllers - The brain behind orchestration. They monitor the cluster and react to events such as nodes going down.

Kubernetes worker node components:

- container runtime - underlying runtime used to manage containers

- kubelet - agent that checks the health and manages the pods running on the node based on the desired state provided in the PodSpec

- kube-proxy - network proxy that maintains network rules applied to nodes and allows network access between Pods in a cluster

You can scale up multiple pods on a single node until the node has no more resources, at which time a new node needs to be added and pod instances are distributed between the nodes.
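As a rough worked example of that capacity reasoning, the sketch below estimates how many pods fit on one node and how many nodes a given number of pods needs. It considers memory only and uses hypothetical numbers; the real scheduler also accounts for CPU and other resource requests.

```shell
#!/bin/sh
# All numbers are hypothetical: memory (in GiB) available for pods on a node,
# and memory requested by each pod. Assumes POD_MEM <= NODE_MEM.

# usage: pods_per_node NODE_MEM POD_MEM
pods_per_node() {
  echo $(( $1 / $2 ))                          # integer division rounds down
}

# usage: nodes_needed REPLICAS NODE_MEM POD_MEM
nodes_needed() {
  per_node=$(pods_per_node "$2" "$3")
  echo $(( ($1 + per_node - 1) / per_node ))   # ceiling division
}

pods_per_node 32 10     # prints 3
nodes_needed 7 32 10    # prints 3
```

With 32 GiB nodes and 10 GiB pods, three pods fit on each node, so seven pods spill over onto a third node.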

- So how does this relate to Trino?

- Out of the box, Kubernetes can do these key things for Trino.

- Simple scale up and down (manually tell k8s to start or kill Trino pods).

- Kubernetes supports failover, meaning that your workers will restart if they die.

- Advanced features that are possible but not currently available in open source:

- Auto-scaling via the Horizontal Pod Autoscaler and custom metrics.

- Graceful shutdown: hooks that you can add to your cluster that delay the shutdown of a node, to avoid failed calls to a node that has already shut down.

Source: https://learnk8s.io/graceful-shutdown

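The manual scaling and autoscaling ideas above can be sketched with two standard kubectl commands, `kubectl scale` and `kubectl autoscale`. The deployment name `tcb-trino-worker` is an assumption about how a worker deployment might be named, and the replica counts and CPU threshold are arbitrary. The autoscaler here is driven by CPU utilization, which is simpler than the custom metrics mentioned above. By default the sketch only prints the commands.

```shell
#!/bin/sh
# KUBECTL defaults to a dry run that only prints the command.
# Set KUBECTL=kubectl to run against a real cluster.
KUBECTL="${KUBECTL:-echo kubectl}"

# Manually set the number of pods in a deployment.
# usage: scale_workers DEPLOYMENT REPLICAS
scale_workers() {
  $KUBECTL scale deployment "$1" --replicas="$2"
}

# Create a Horizontal Pod Autoscaler driven by CPU utilization.
# usage: autoscale_workers DEPLOYMENT MIN MAX CPU_PERCENT
autoscale_workers() {
  $KUBECTL autoscale deployment "$1" --min="$2" --max="$3" --cpu-percent="$4"
}

# Hypothetical deployment name and values.
scale_workers tcb-trino-worker 3
autoscale_workers tcb-trino-worker 2 5 80
```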

- What the heck are Helm charts then?

- Helm is a package manager for Kubernetes

- Removes the need for managing lots of Kubernetes-related YAML files

- Best way to deploy apps to Kubernetes

- Charts are available for many different applications

- Helm chart for Trino

PR of the week: PR 11 Merge contributor version of k8s charts with the community version

This week’s PR of the week comes from a different repo under the trinodb org, trinodb/charts. The PR comes from contributor Valeriano Manassero.

Valeriano maintains a very useful Helm chart that he started before the Trino org had defined its own community chart. This pull request merges some of the useful features Valeriano added to his Trino Helm chart, so that they can be maintained in the community version.

Valeriano’s Trino Helm Chart: https://artifacthub.io/packages/helm/valeriano-manassero/trino

It hasn’t been merged yet but we are really looking forward to seeing this get merged in. Thanks Valeriano!

Demo: Running the Trino charts with kubectl

For this week’s demo, you need to install kubectl, minikube using the docker driver, and Helm. You can find the Trino Helm chart on ArtifactHub at this URL.

https://artifacthub.io/packages/helm/trino/trino

First, start your minikube instance.

minikube start --driver=docker

Now take a quick look at the state of your k8s cluster.

kubectl get all

Add the template for the different Trino catalogs on coordinators and workers.

kubectl apply -f - <<EOF
# Source: trino/templates/configmap-catalog.yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: tcb-trino-catalog
  labels:
    app: trino
    chart: trino-0.2.0
    release: tcb
    heritage: Helm
    role: catalogs
data:
  tpch.properties: |
    connector.name=tpch
    tpch.splits-per-node=4
  tpcds.properties: |
    connector.name=tpcds
    tpcds.splits-per-node=4
EOF

Add the template for a single coordinator configuration.

kubectl apply -f - <<EOF
# Source: trino/templates/configmap-coordinator.yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: tcb-trino-coordinator
  labels:
    app: trino
    chart: trino-0.2.0
    release: tcb
    heritage: Helm
    component: coordinator
data:
  node.properties: |
    node.environment=production
    node.data-dir=/data/trino
    plugin.dir=/usr/lib/trino/plugin
  jvm.config: |
    -server
    -Xmx8G
    -XX:+UseG1GC
    -XX:G1HeapRegionSize=32M
    -XX:+UseGCOverheadLimit
    -XX:+ExplicitGCInvokesConcurrent
    -XX:+HeapDumpOnOutOfMemoryError
    -XX:+ExitOnOutOfMemoryError
    -Djdk.attach.allowAttachSelf=true
    -XX:-UseBiasedLocking
    -XX:ReservedCodeCacheSize=512M
    -XX:PerMethodRecompilationCutoff=10000
    -XX:PerBytecodeRecompilationCutoff=10000
    -Djdk.nio.maxCachedBufferSize=2000000
  config.properties: |
    coordinator=true
    node-scheduler.include-coordinator=true
    http-server.http.port=8080
    query.max-memory=4GB
    query.max-memory-per-node=1GB
    query.max-total-memory-per-node=2GB
    memory.heap-headroom-per-node=1GB
    discovery-server.enabled=true
    discovery.uri=http://localhost:8080
  log.properties: |
    io.trino=INFO
EOF

Add the tcb-trino service definition to run Trino.

kubectl apply -f - <<EOF
# Source: trino/templates/service.yaml
apiVersion: v1
kind: Service
metadata:
  name: tcb-trino
  labels:
    app: trino
    chart: trino-0.2.0
    release: tcb
    heritage: Helm
spec:
  type: ClusterIP
  ports:
    - port: 8080
      targetPort: http
      protocol: TCP
      name: http
  selector:
    app: trino
    release: tcb
    component: coordinator
EOF

Add the deployment definition for the service.

kubectl apply -f - <<EOF
# Source: trino/templates/deployment-coordinator.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: tcb-trino-coordinator
  labels:
    app: trino
    chart: trino-0.2.0
    release: tcb
    heritage: Helm
    component: coordinator
spec:
  selector:
    matchLabels:
      app: trino
      release: tcb
      component: coordinator
  template:
    metadata:
      labels:
        app: trino
        release: tcb
        component: coordinator
    spec:
      securityContext:
        runAsUser: 1000
        runAsGroup: 1000
      volumes:
        - name: config-volume
          configMap:
            name: tcb-trino-coordinator
        - name: catalog-volume
          configMap:
            name: tcb-trino-catalog
      imagePullSecrets:
        - name: registry-credentials
      containers:
        - name: trino-coordinator
          image: "trinodb/trino:latest"
          imagePullPolicy: IfNotPresent
          volumeMounts:
            - mountPath: /etc/trino
              name: config-volume
            - mountPath: /etc/trino/catalog
              name: catalog-volume
          ports:
            - name: http
              containerPort: 8080
              protocol: TCP
          livenessProbe:
            httpGet:
              path: /v1/info
              port: http
          readinessProbe:
            httpGet:
              path: /v1/info
              port: http
          resources:
            {}
EOF

Now check the state of the k8s cluster again.

kubectl get all

Run the following command to expose the URL and port of the service to your local machine.

minikube service tcb-trino --url
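Once you have the service URL, one way to confirm the coordinator is responding is to request the same `/v1/info` path that the liveness and readiness probes in the deployment use. The sketch below is a hedged example: the helper names `info_url` and `check_coordinator` and the port `12345` are made up for illustration, and the check itself needs curl and a running coordinator.

```shell
#!/bin/sh
# Build the URL of the /v1/info endpoint from a base service URL.
# usage: info_url BASE_URL
info_url() {
  printf '%s/v1/info\n' "${1%/}"    # strip one trailing slash, if present
}

# Query the endpoint; requires curl and a reachable coordinator.
# usage: check_coordinator BASE_URL
check_coordinator() {
  curl --silent --fail "$(info_url "$1")"
}

# Hypothetical base URL; minikube prints the real one.
info_url "http://127.0.0.1:12345/"   # prints http://127.0.0.1:12345/v1/info
```

With a live cluster, you could pass the real URL in directly, for example `check_coordinator "$(minikube service tcb-trino --url)"`, assuming that command prints a single URL.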

Clean up all the resources.

kubectl delete pod --all

kubectl delete replicaset --all

kubectl delete service tcb-trino

kubectl delete deployment tcb-trino-coordinator

kubectl delete configmap --all

Now you can run the same demo using the Helm chart, which includes all of these templates out of the box. First add the Trino Helm chart repository, check the templates that Helm produces, and run the install.

# HELM DEMO

helm repo add trino https://trinodb.github.io/charts/

helm template tcb trino/trino --version 0.2.0

helm install tcb trino/trino --version 0.2.0

Now that it’s installed, run the same command to expose the URL of the service.

minikube service tcb-trino --url

Clean up all the resources.

minikube delete

helm repo remove trino

Events, news, and various links

Trino Summit is moving to 100% virtual: register here.

Trino Meetup groups

- Virtual

- East Coast (US)

- West Coast (US)

- Mid West (US)

If you want to learn more about Trino, check out Trino: The Definitive Guide from O’Reilly. You can download the free PDF or buy the book online.

Music for the show is from the Megaman 6 Game Play album by Krzysztof Słowikowski.