Video

- Concept of the week: LakeFS and Git on Object Storage (546s)

- Demo of the week: Running Trino on LakeFS (2445s)

- PR of the week: PR 8762 Add query error info to cluster overview page in web UI (4271s)

- Question of the week: Why are deletes so limited in Trino? (4454s)

Audio

Guests

- Paul Singman, Developer Advocate at Treeverse (@datawhisp).

Trino Summit 2021

Get ready for Trino Summit, coming October 21st and 22nd! This annual Trino community event is where we gather practitioners that deploy Trino at scale, and share their experiences and best practices with the rest of the community. While the planning for this event was a bit chaotic due to the pandemic, we have made the final decision to host the event virtually for the safety of all the attendees. We look forward to seeing you there, and can’t wait to share more information in the coming weeks!

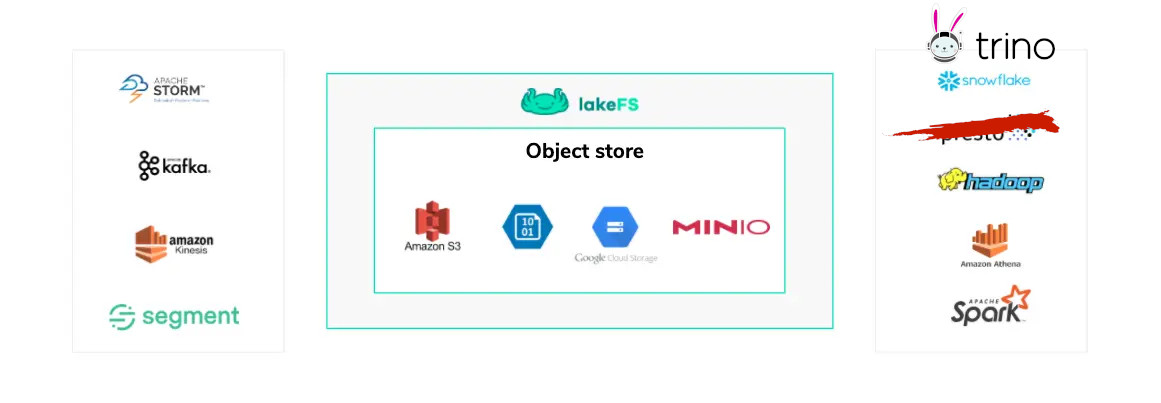

Concept of the week: LakeFS and Git on object storage

LakeFS offers git-like semantics over your files in the data lake. Akin to the versioning you can do on Iceberg, you can also version your data with LakeFS, and roll back to previous commits when you make a mistake. LakeFS allows you to roll out new features in production or prod-like environments with ease and isolation from the real data. Join us as we dive into this awesome new way to approach versioning on your data!

Why we built LakeFS: Source.

Features

- Exabytes scale version control

- Git-like operations: branch, commit, merge, revert

- Zero copy branching for frictionless experiments

- Full reproducibility of data and code

- Pre-commit/merge hooks for data CI/CD

- Instantly revert changes to data

Use cases

In development

- Experiment - try new tools, upgrade versions, and evaluate code changes in isolation. By creating a branch of the data you get an isolated snapshot to run experiments over, while others are not exposed. Compare between branches with different experiments or to the main branch of the repository to understand a change’s impact.

- Debug - checkout specific commits in a repository’s commit history to materialize consistent, historical versions of your data. See the exact state of your data at the point-in-time of an error to understand its root cause.

- Collaborate - avoid managing data access at the two extremes of either treating your data lake like a shared folder or creating multiple copies of the data to safely collaborate. Instead, leverage isolated branches managed by metadata (not copies of files) to work in parallel.

During deployment

- Version Control - deploy data safely with CI/CD workflows borrowed from software engineering best practices. Ingest new data onto an isolated branch, perform data validations, then add to production through a merge operation.

- Test - define pre-merge and pre-commit hooks to run tests that enforce schema and validate properties of the data to catch issues before they reach production.

In production

- Roll back - recover from errors by instantly reverting data to a former, consistent snapshot of the data lake. Choose any commit in a repository’s commit history to revert in one atomic action.

- Troubleshoot - investigate production errors by starting with a snapshot of the inputs to the failed process. Spend less time re-creating the state of datasets at the time of failure, and more time finding the solution.

- Cross-collection consistency - provide consumers multiple synchronized collections of data in one atomic, revertable action. Using branches, writers provide consistency guarantees across different logical collections - merging to the main branch only after all relevant datasets have been created or updated successfully.

Source: https://docs.lakefs.io/#use-cases

Demo of the week: Running Trino on LakeFS

In order to run Trino and LakeFS, you need Docker installed on your system with at least 4GB of memory allocated to Docker.

Let’s start up the LakeFS instance and the required PostgreSQL instance along

with the typical Trino containers used with the Hive connector.

Clone the trino-getting-started repository and navigate to the

community_tutorials/lakefs/trino-lakefs-minio/ directory.

git clone [email protected]:bitsondatadev/trino-getting-started.git

cd community_tutorials/lakefs/trino-lakefs-minio/

docker-compose up -d

Once this is done, you can navigate to the following locations to verify that everything started correctly.

- Navigate to http://localhost:8000 to open the LakeFS user interface.

- Log in with Access Key,

AKIAIOSFODNN7EXAMPLE, and Secret Access Key,wJalrXUtnFEMI/K7MDENG/bPxRfiCYEXAMPLEKEY. - Verify that the

examplerepository exists in the UI and open it. - The branch

mainin the repository, found underexample/main/, should be empty.

Once you have verified the repository exists, let’s go ahead and create a schema

under the Trino Hive catalog called minio that was pointing to minio but is

now wrapped by LakeFS to add the git-like layer around the file storage.

Name the schema tiny as that is the schema we copy from the TPCH data set.

Notice the location property of the schema. It now has a namespace that is

prefixed before the actual tiny/ table directory. The prefix contains the

repository name, then the branch name. All together this follows the pattern of

<protocol>://<repository>/<branch>/<schema>/.

CREATE SCHEMA minio.tiny

WITH (location = 's3a://example/main/tiny');

Now, create two tables, customer and orders by setting external_location

using the same namespace used in the schema and adding the table name. The query

retrieves the data from the tiny TPCH data set.

CREATE TABLE minio.tiny.customer

WITH (

format = 'ORC',

external_location = 's3a://example/main/tiny/customer/'

)

AS SELECT * FROM tpch.tiny.customer;

CREATE TABLE minio.tiny.orders

WITH (

format = 'ORC',

external_location = 's3a://example/main/tiny/orders/'

)

AS SELECT * FROM tpch.tiny.orders;

Verify that you can see the table directories in LakeFS once they exist. http://localhost:8000/repositories/example/objects?ref=main&path=tiny%2F

Run a query on these two tables using the standard table pointing to the main

branch.

SELECT ORDERKEY, ORDERDATE, SHIPPRIORITY

FROM minio.tiny.customer c, minio.tiny.orders o

WHERE MKTSEGMENT = 'BUILDING' AND c.CUSTKEY = o.CUSTKEY AND

ORDERDATE < date'1995-03-15'

GROUP BY ORDERKEY, ORDERDATE, SHIPPRIORITY

ORDER BY ORDERDATE;

Open the LakeFS UI again and click on the Unversioned Changes tab. Click Commit Changes. Type a commit message on the popup and click Commit Changes.

Once the changes are commited on branch main, click on the Branches tab.

Click Create Branch. Name a new branch sandbox that branches off of the

main branch. Now click Create.

Although there is a branch that exists called sandbox, this only exists

logically. We need to make Trino aware by adding another schema and tables

that point to the new branch. Do this by making a new schema called

tiny_sandbox and changing the location property to point to the sandbox

branch instead of the main branch.

CREATE SCHEMA minio.tiny_sandbox

WITH (location = 's3a://example/sandbox/tiny');

Once the tiny_sandbox schema exists, we can copy the table definitions

of the customer and orders table from the original tables created. We got

the schema for free by copying it directly from the TPCH data using the CTAS

statement. We don’t want to use CTAS in this case as it not only copies the

table definition, but also the data. This duplication of data is unnecessary and

is what creating a branch in LakeFS avoids. We want to just copy the table

definition using the SHOW CREATE TABLE statement.

SHOW CREATE TABLE minio.tiny.customer;

SHOW CREATE TABLE minio.tiny.orders;

Take the output and update the schema to tiny_sandbox and external_location

to point to sandbox for both tables.

CREATE TABLE minio.tiny_sandbox.customer (

custkey bigint,

name varchar(25),

address varchar(40),

nationkey bigint,

phone varchar(15),

acctbal double,

mktsegment varchar(10),

comment varchar(117)

)

WITH (

external_location = 's3a://example/sandbox/tiny/customer',

format = 'ORC'

);

CREATE TABLE minio.tiny_sandbox.orders (

orderkey bigint,

custkey bigint,

orderstatus varchar(1),

totalprice double,

orderdate date,

orderpriority varchar(15),

clerk varchar(15),

shippriority integer,

comment varchar(79)

)

WITH (

external_location = 's3a://example/sandbox/tiny/orders',

format = 'ORC'

);

Once these table definitions exist, go ahead and run the same query as before,

but update using the tiny_sandbox schema instead of the tiny schema.

SELECT ORDERKEY, ORDERDATE, SHIPPRIORITY

FROM minio.tiny_sandbox.customer c, minio.tiny_sandbox.orders o

WHERE MKTSEGMENT = 'BUILDING' AND c.CUSTKEY = o.CUSTKEY AND

ORDERDATE < date'1995-03-15'

ORDER BY ORDERDATE;

One last bit of functionality we want to test is the merging capabilities. To

do this, create a table called lineitem in the sandbox branch using a CTAS

statement.

CREATE TABLE minio.tiny_sandbox.lineitem

WITH (

format = 'ORC',

external_location = 's3a://example/sandbox/tiny/lineitem/'

)

AS SELECT * FROM tpch.tiny.lineitem;

Verify that you can see three table directories in LakeFS including lineitem

in the sandbox branch.

http://localhost:8000/repositories/example/objects?ref=sandbox&path=tiny%2F

Verify that you do not see lineitem in the table directories in LakeFS in the

main branch.

http://localhost:8000/repositories/example/objects?ref=main&path=tiny%2F

You can also verify this by running queries against lineitem in the tables

pointing to the sandbox branch that should fail on the tables pointing to the

main branch.

To merge the new table lineitem to show up in the main branch, first commit

the new change to sandbox by again going to Unversioned Changes tab.

Click Commit Changes. Type a commit message on the popup and click

Commit Changes.

Once the lineitem add is committed, click on the Compare tab. Set the

base branch to main and the compared to branch to sandbox. You should see

the addition of a line item show up in the diff view. Click Merge and click

Yes.

Once this is merged you should see the table data show up in LakeFS. Verify that

you can see lineitem in the table directories in LakeFS in the main branch.

http://localhost:8000/repositories/example/objects?ref=main&path=tiny%2F

As before, we won’t be able to query this data from Trino until we run the

SHOW CREATE TABLE from the tiny_sandbox schema and use the output to create

the table in the tiny schema that is pointing to main.

PR of the week: PR 8762 Add query error info to cluster overview page in web UI

The PR of the week adds some really useful context around query failures in the Trino Web UI. This PR was created by Pádraig O’Sullivan . For many, it can be fustrating when a query fails and you have to do a lot of digging before you understand even the type of error that is happening.This PR gives a better highlight of what failed so that you don’t have to do a lot of investigation upfront to get a sense of what is happening and where to look next.

Thank you so much Pádraig!

Question of the week: Why are deletes so limited in Trino?

Our question of the week

comes from Marius Grama on our Trino community Slack. Marius created the

dbt-trino adapter

and wants to implement INSERT OVERWRITE functionality.

INSERT OVERWRITE checks whether there are entries in the target table that

exist as well in the staging table, and it first deletes the target entries,

before inserting the staging entries. Unfortunately the delete didn’t work for

RDBMS, Hive, or Iceberg. His questionis if this is a limitation of Trino for

all connectors, and how we can approach the “delete” part of INSERT OVERWRITE

Events, news, and various links

Blogs and Resources

- Hive Metastore - Why its still here and what can replace it

- Hive Metastore - It didn’t age well

- Hudi, Iceberg, Delta Lake Table Formats Compared

- The Docker Everything Bagel

Trino Meetup groups

- Virtual

- East Coast (US)

- West Coast (US)

- Mid West (US)

If you want to learn more about Trino, check out the definitive guide from OReilly. You can download the free PDF or buy the book online.

Music for the show is from the Megaman 6 Game Play album by Krzysztof Słowikowski.